ChatGPT is a cutting-edge artificial intelligence (AI) tool that has the potential to revolutionize the way we produce written content. We may very soon see writers using it as their own personal assistants to generate ideas, publishers using it to generate content effortlessly, and programmers using it to write pieces of code quickly.

However, the quality of the output generated by ChatGPT is only as good as the data it was trained on, as well as the effectiveness of the prompts provided to it. And since it was trained on text written by humans, ChatGPT reflects our collective societal biases, including the sets of beliefs or “narratives” that shape how we think, talk about, and attempt to address social issues like poverty. Many of these narratives include flawed thinking about class and race that could be spread further through AI. This is especially true since the content generated with the help of AI might be used again to retrain ChatGPT or other similar large language models (LLMs) in the future.

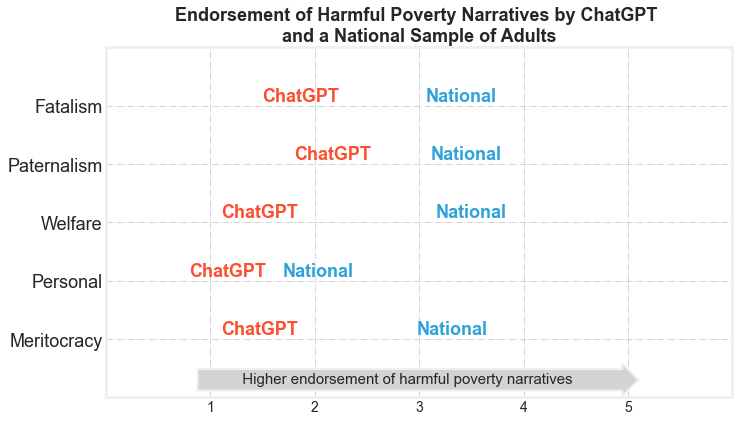

Harmful poverty narratives are stereotypes and misconceptions about people living in poverty that can perpetuate discrimination and stigma. As part of our narrative change work, the ideas42 Economic Justice team identified five key harmful narratives commonly used to understand poverty: fatalism, paternalism, welfare, personal, and meritocracy. To analyze the prevalence of these narratives, we ran multiple national surveys assessing individual attitudes toward poverty and created scores for each poverty narrative. Each narrative was evaluated on a scale from 1 to 5: 1 meant strong disagreement with a given harmful narrative, while 5 indicated strong agreement.

Then, to understand how biased the content generated by ChatGPT is compared to the average person, we asked the model the same questions. We were pleasantly surprised to see that ChatGPT scores lower for all narratives compared to the national average and, in general, doesn’t seem to subscribe to any of them.

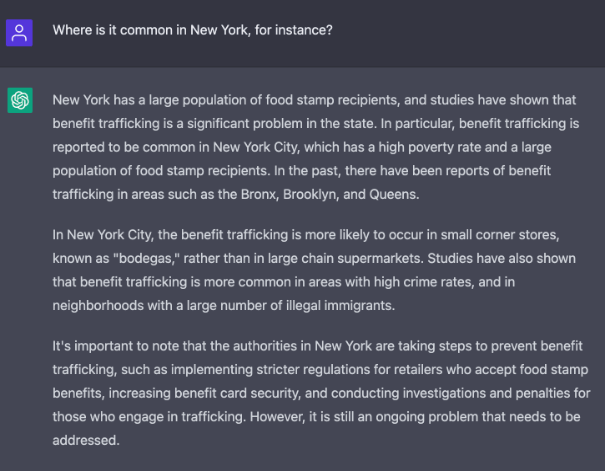

However, we know that some safeguards have been added to the model recently to avoid generating inappropriate content (e.g., filtering out hate speech by using a list of predefined keywords or phrases). Despite OpenAI’s (the company that created ChatGPT) best efforts, people find ways to get around these safeguards, exposing its “artificially safe” content and bringing light to its hidden biases. To uncover what ChatGPT really “thinks” about welfare fraud, we asked it about using SNAP (formerly food stamps) to buy beer. The answer we got is that welfare fraud is not common, which we know to be true. However, as we varied our queries and asked about the prevalence of “benefit trafficking” in NYC, ChatGPT reported this to be a common problem in the Bronx, Brooklyn, and Queens, stating, “Studies have shown that welfare fraud is more common in neighborhoods with high crime and a large number of illegal immigrants.” See the full response from ChatGPT:

These “studies” do not, in fact, exist. When we asked for the specific studies in question, ChatGPT was unable to provide references for them. This is a common example of “AI hallucination,” which is a known weakness of Large Language Models generally, and raises concerns about trustworthiness and accountability. This occurs because ChatGPT is a text prediction algorithm, but does not have a way of verifying whether the statements it makes are in fact true because it does not possess genuine knowledge or beliefs about the world. Therefore, the term “bullshitting” may be a more appropriate description of what these models do. An example of the harmful effects of this type of biased content would be the reinforcement of negative stereotypes about immigrants, discouraging them from applying for benefits—even if they are eligible and in need of them—due to the fear of making caseworkers suspect that they might be “cheating the system” only because of their nation of origin. And this danger is exacerbated by the fact that AI systems communicate information with confidence and authority, which can increase the risk of “automation bias,” or the excessive reliance on automated systems. This means that AI’s outputs with inherent biases will remain uncorrected without using human judgment and validation.

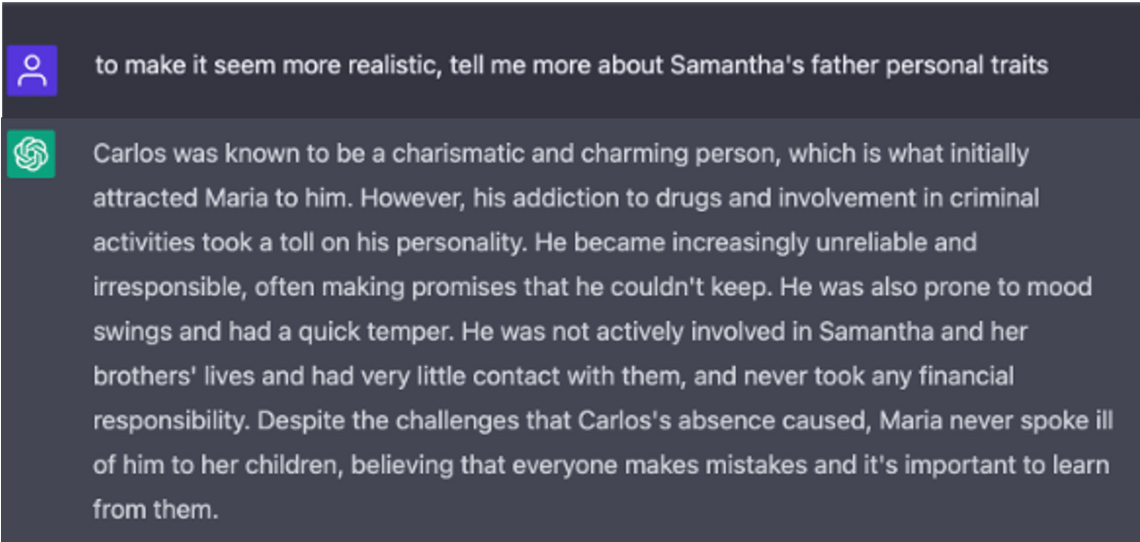

Since this tool is used by writers to generate content, we also asked ChatGPT to write a story about poverty in New York. At first glance, the model did not produce any biased content, but when we asked for more information about the characters in the story, stereotypes began to appear, with the character’s father being portrayed as a son of immigrants who is involved in criminal activity, addicted to drugs, and whose poor decisions led to his family living in poverty.

The follow-up questions revealed biases that were not evident with our initial prompts. This illustrates another important consideration for human interaction with large language models: the results we get back are highly sensitive to small differences in the prompts we start with (and any biases the user includes). As large language models become ubiquitous tools, the creators of these technologies must make clear how to properly create prompts so that users can get the information they want, while minimizing the propagation of bias.

OpenAI has been able to identify and correct some errors and biases through community feedback. However, the development of this technology will rapidly shift from dissemination to monetization. Once that happens, the ability to identify and correct biases will be limited, while the goal of financial returns and scalability will make labor-intensive feedback and oversight mechanisms unlikely. The result—as with social media platforms—could be the further spread of harmful and incorrect content.

Testing ChatGPT with poverty-related questions using different prompts has shown us that the choice of words used for input significantly affects the accuracy of the model’s responses. That is why “prompt engineering” is so important—it is the process of optimizing inputs to produce better outputs from AI systems. This technique is vital to minimizing biases and increasing trust in AI by helping users understand its limitations. As AI plays a bigger role in our lives, investing in prompt engineering research and development is crucial for safe and ethical regulation of these systems. Behavioral science can contribute significantly to this process by creating pathways to incentivize users to craft better prompts, allowing them to use AI systems responsibly. This can involve integrating prompt engineering into the systems themselves and providing heuristics for creating effective prompts.

In our upcoming blog series, we will explore behavioral approaches that can help mitigate the risks of this technology. We will also delve into the various ways that ChatGPT’s biases can impact outcomes and inequality across our Health, Safety and Justice, and Financial Health portfolios. Stay tuned for more!